Radio companies have been using artificial intelligence for years in scheduling software, recommendation engines and speech recognition, but the pace of change has now accelerated, and with the development of Generative AI the potential for artificial intelligence in radio has taken another huge leap.

A range of speakers gave insights into the new playing field at last month’s RadioDays Europe conference, pointing out how linking all the new technologies together is already changing how radio companies operate and strategise about their futures.

Each of the new developments is innovative in its own right, but the potential for the biggest change lies in combining all of them.

The most common scenario will be the creation of new content for news or radio presentation by combining all the tools. It works like this:

• Speech-to-text engines turn your audio into written text

• The text of your content is fed to a Generative AI engine such as ChatGPT

• The Generative AI engine analyses the content and learns syntax, style and sentence structures for your station

• When asked to create a news story or find something interesting to talk about, it scans the internet for information about that topic, then structures it using what it has learnt about your station’s style. Machine learning is used within the service to generate the best content, not just the first thing that is found

• The output of the Generative AI is fed to a voice synthesis engine to turn it from text to speech

• A voice synthesiser reads the text to the listener, applying compression and other AI driven audio processing tools to make it sound right for the listener

• If your listener is interacting with the content on a smart speaker, the listener can ask questions and the AI interaction engine can respond in real time with more information, or ask the listener some questions to see if they want to hear more information or related stories.
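The chain of steps above can be sketched as a simple data flow. This is a toy illustration of how the stages hand data to each other, not any vendor's implementation: every function here (`speech_to_text`, `learn_style`, `generate_story`, `text_to_speech`) is a hypothetical stand-in, and the "style" learnt is just the average sentence length of past shows, where a real system would call a speech recognition service, a generative model and a voice synthesis engine. Internet search and smart-speaker interaction are left out.

```python
import re
from statistics import mean


def speech_to_text(audio: bytes) -> str:
    """Hypothetical STT stub: pretend the 'audio' already holds UTF-8 speech."""
    return audio.decode("utf-8")


def learn_style(transcripts: list[str]) -> dict:
    """Toy 'style profile': average words per sentence across past shows."""
    sentences = [
        s for t in transcripts for s in re.split(r"[.!?]+\s*", t) if s.strip()
    ]
    return {"words_per_sentence": round(mean(len(s.split()) for s in sentences))}


def generate_story(facts: list[str], style: dict) -> str:
    """Stand-in for a generative model: trims each fact to the station's typical sentence length."""
    limit = style["words_per_sentence"]
    return " ".join(" ".join(f.split()[:limit]) + "." for f in facts)


def text_to_speech(text: str) -> bytes:
    """Hypothetical TTS stub: a real engine would return synthesised audio."""
    return text.encode("utf-8")


# Audio archive -> transcripts -> style profile -> new script -> audio
archive = [b"Good morning. Traffic is light on the bridge. Rain later today."]
style = learn_style([speech_to_text(a) for a in archive])
script = generate_story(["Council approves new cycle lanes in the city centre"], style)
audio_out = text_to_speech(script)
```

The point of the sketch is only that each stage's output is the next stage's input, which is why the article argues the biggest gains come from linking the tools rather than using any one of them alone.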

Several experts think that combining these new AI tools with smart speakers could mean the end of Google and the advent of a new type of search, powered by voice and smart speakers.

Satu Keto from YLE’s Radio Technology and Development unit and Anna Vosalikova from Czech Radio told conference delegates how the Finnish and Czech national broadcasters have been playing with this technology, generating AI-created crime fiction podcasts, horror stories, interactive audio dramas, news reports and website content.

“For media the future is no longer about transformation to digital… we have already done that. The transformation is now the transformation of digital,” said Satu Keto.

While learning about the possibilities from their experiments, the speakers said they had also learnt that responsible broadcasters will need to consider many issues, such as being transparent by telling audiences when AI is being used, and warning them about the possibility of scams using deepfake videos and counterfeit voices. “Experimentation in 2021 showed us how easy it is to steal a person’s voice that can be used in hoaxes, but we saw how the technology can also be used to better interact with your listeners using a digital twin.

“We have to play with these tools to understand how they can help us concentrate on the work we do… there are transparency and legal issues to discuss, to ensure trust remains strong with your audience… We need to be able to authenticate ourselves for our audience.”

In recent years we have seen unscrupulous fraudsters fake radio stations’ social media accounts, so imagine if they begin to do the same with voice and video fakes. In Europe, governments are already considering these issues and are expected to lead on regulating how this technology should be used, just as they did on data protection with the GDPR. Responsible broadcasters will have an important role to play as societies consider the implications of the latest AI tools.

Contextual Targeting

For advertisers and listeners, some of the new tools will bring many benefits, solving some of the privacy problems associated with tracking. One of these is contextual targeting for improved recommendations.

By using speech-to-text recognition engines on radio shows and podcasts, intelligent podcast and streaming platforms will be able to recommend content to listeners more accurately without knowing anything about them except what they have previously listened to. Currently, recommendation engines are not very smart. They rely on people tagging or categorising their program or podcast, and those tags or categories are matched to the tags or categories of the previous show a listener heard in order to recommend ‘if you liked that, you may like this.’ But those recommendations are often wildly inaccurate.

Contextual targeting uses speech-to-text tools to analyse every sentence spoken in a podcast or catch-up radio show and work out in detail what is actually being discussed. It then matches that against the content of the show you just listened to, giving you a closer match with content that is more likely to interest you.
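A minimal sketch of matching shows by what was actually said, assuming transcripts are already available from a speech-to-text engine. It uses plain bag-of-words cosine similarity, a deliberately simple technique chosen for illustration; the platforms discussed may use very different models. The stop-word list, show titles and transcripts are made up.

```python
import math
import re
from collections import Counter

# Small illustrative stop-word list; real systems use far larger ones
STOPWORDS = {"the", "a", "and", "of", "to", "in", "is", "on", "for", "we", "about"}


def bag_of_words(transcript: str) -> Counter:
    """Count the content words in a transcript, ignoring case and stop words."""
    words = re.findall(r"[a-z']+", transcript.lower())
    return Counter(w for w in words if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 = nothing in common)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def recommend(just_heard: str, catalogue: dict[str, str]) -> str:
    """Return the catalogue title whose transcript is closest to what the listener just heard."""
    target = bag_of_words(just_heard)
    return max(catalogue, key=lambda title: cosine(target, bag_of_words(catalogue[title])))


just_heard = "We talked about electric cars, battery range and charging stations."
catalogue = {
    "Motoring Hour": "Reviewing electric cars and how far a battery charge really goes.",
    "Garden Show": "Planting roses and pruning fruit trees in winter.",
    "Tech Talk": "New phones, battery life and charging gadgets.",
}
best = recommend(just_heard, catalogue)
```

No listener data is involved: the match depends only on the words in the episode just heard and the words in each candidate episode, which is the privacy argument made for contextual targeting.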

A white paper by Sounder discusses how advertising can benefit from this technology without using any of the listener’s personal data, only what they listened to. Advertisers can then buy by listener interest rather than by general categories or listener demographics. The Sounder white paper explains:

“Contextual targeting delivers ads based on content relevancy, as opposed to audience targeting, which targets specific types of users regardless of the content they are consuming at the time of receiving the ad. By analyzing a show’s episodes and content to identify contextual targeting opportunities, instead of relying only on podcast metadata like Apple’s genres, media buyers can more confidently find opportunities with higher relevance to a brand’s needs… the contextual evaluation of inventory can include more podcasts than ever before and significantly increase the amount of content available for advertisers.”

Audience Research

Radio Analyzer has been around for a while, but its latest offerings include audience research tools that map people meter ratings data to the program clock and the broadcast audio, giving a visual guide to which segments of a program worked well and which did badly with the audience.

By using minute-by-minute analysis, the measurement tool is able to accurately conclude what engages listeners and what programmers, producers and presenters can do to keep them entertained.
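A rough sketch of how per-minute ratings might be lined up against a program clock to see which segment lost or gained listeners. The data layout (one listener estimate per minute, and a clock given as segment start minutes) is a hypothetical simplification, not Radio Analyzer's actual format, and the figures are invented.

```python
def segment_changes(clock: list[tuple[int, str]], audience: list[int]) -> list[tuple[str, int]]:
    """Net change in listeners from the first to the last minute of each segment.

    clock: (start_minute, segment_name) pairs in broadcast order (hypothetical format)
    audience: estimated listeners for each minute, index 0 = first minute
    """
    # Each segment ends where the next one starts; the last runs to the end of the data
    ends = [start for start, _ in clock[1:]] + [len(audience)]
    return [
        (name, audience[end - 1] - audience[start])
        for (start, name), end in zip(clock, ends)
    ]


clock = [(0, "Music"), (3, "Weather"), (5, "Chat")]
audience = [1000, 1010, 1020, 1015, 960, 970, 990, 1000]
changes = segment_changes(clock, audience)
worst = min(changes, key=lambda item: item[1])  # segment with the biggest loss
```

Here the weather segment would be flagged, which is the kind of pattern behind the weather takeaway below; a real tool would also smooth noisy meter data and compare across many days before drawing conclusions.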

Some takeaways from analysis of personality radio shows demonstrated at the conference included:

• Irrelevant weather reports lose listeners. Make the reports useful by telling listeners to bring an umbrella or dress warmly, so the weather report is information they can use, not just another program segment

• Talk over the end of songs to transition listeners from the music they like into the talk

• Emotions such as crying or laughing engage listeners

• Sport results are boring for listeners. Talk more about unique sport news items, not just the results

• Don’t put too many topics into your morning show; use a few topics and keep coming back to them in new ways every hour

• Across the day, talking about the same topic does less well after it is first heard. Listeners feel they have already heard it and don’t pay attention or turn off, so, if you do return to a topic, explain how it is updated as you introduce it

• Go straight to the point in each topic to catch the audience quickly

Journalism

Turning written news stories into audio, or audio stories into web reports, is increasingly common in newsrooms, as AI is used to keep the angle and basic facts of a story while formatting it differently for television, radio or the web. Reformatting the story for each medium saves journalists from having to write three different versions of it. Of course, journalists in responsible media organisations still check their work for each platform before publishing.

Marianne Bouchart, the founder of a nonprofit organisation promoting news innovation said:

“Don’t trust robots to make decisions, but use them to help you gather data. Make your own editorial decisions. Be ethical and tell your audience what you are doing… transparency is necessary.”

AI monitoring tools can watch social media sites for keywords and images. For instance, AI analysis of TikTok in Russia found that, at the beginning of the Ukraine invasion, TikToks mentioning the word ‘war’ or showing pictures of soldiers with guns were not shown in Russia.
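A toy version of the kind of comparison described: given a sample of posts and the regions where each was visible, measure how often posts containing a keyword were visible in a given region. The post structure, region codes and data are invented for illustration; real monitoring would also need image analysis and a way of observing what users in each region actually see.

```python
import re


def visibility_rate(posts: list[dict], keyword: str, region: str):
    """Share of posts mentioning `keyword` (whole word, any case) that were visible in `region`."""
    pattern = re.compile(rf"\b{re.escape(keyword)}\b", re.IGNORECASE)
    matching = [p for p in posts if pattern.search(p["text"])]
    if not matching:
        return None  # no matching posts, so no evidence either way
    visible = sum(region in p["visible_in"] for p in matching)
    return visible / len(matching)


# Hypothetical sample: each post records the regions where it was observed
posts = [
    {"text": "No to war", "visible_in": {"DE", "FR"}},
    {"text": "War news today", "visible_in": {"DE"}},
    {"text": "An award-winning cat video", "visible_in": {"DE", "RU"}},
]
rate_ru = visibility_rate(posts, "war", "RU")
rate_de = visibility_rate(posts, "war", "DE")
```

Matching on whole words keeps "award" from counting as a mention of "war"; a large gap between regions for the same keyword is the signal worth investigating, not proof of censorship on its own.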

Still confused about AI?

Morna McAulay, a data transformation and strategy leader, explained that artificial intelligence is Data + Algorithm = Output. It is good for tracking and analysis but does not create significant original content in itself.

Machine learning is the next evolution of AI, and it is what drives services such as ChatGPT.

Machine learning creates the algorithm, not just the output: Data + Output = Algorithm. Because it creates a feedback loop, it allows the AI to continue to learn… it refines what it outputs.
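To make McAulay's two "equations" concrete, here is a deliberately tiny sketch with invented numbers. The first function is a hand-written rule (Data + Algorithm = Output); the second learns its rule from example inputs and outputs using a one-parameter least-squares fit (Data + Output = Algorithm), and re-fitting when new results arrive stands in for the feedback loop. This is only an analogy: ChatGPT is a large neural network trained at a vastly larger scale with different methods.

```python
def rule_based_prediction(talk_minutes: float) -> float:
    """Data + Algorithm = Output: a person wrote this rule by hand (hypothetical: -2 per minute)."""
    return -2.0 * talk_minutes


def learn_rule(talk_minutes: list[float], audience_change: list[float]):
    """Data + Output = Algorithm: fit a slope (least squares through the origin) from examples."""
    slope = sum(x * y for x, y in zip(talk_minutes, audience_change)) / sum(x * x for x in talk_minutes)
    return lambda minutes: slope * minutes


# Past observations: minutes of talk vs change in audience (invented numbers)
minutes_seen = [1.0, 2.0, 3.0]
change_seen = [-1.0, -2.0, -3.0]

model = learn_rule(minutes_seen, change_seen)
first_guess = model(4.0)

# Feedback loop: the real outcome for 4 minutes turns out worse, so the rule is re-learned
minutes_seen.append(4.0)
change_seen.append(-8.0)
model = learn_rule(minutes_seen, change_seen)
second_guess = model(4.0)
```

The hand-written rule never changes unless someone edits it, while the learned rule shifts its prediction for the same input (from -4.0 to about -6.13) once new outcomes are fed back in.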

Some of the new tools discussed by various presenters at the conference include:

RadioGPT – www.futurimedia.com/radiogpt

Audio processing AI tools –

https://auphonic.com/features/leveler

www.cleanvoice.ai

Audience data matching and sentiment analysis – www.podderapp.com

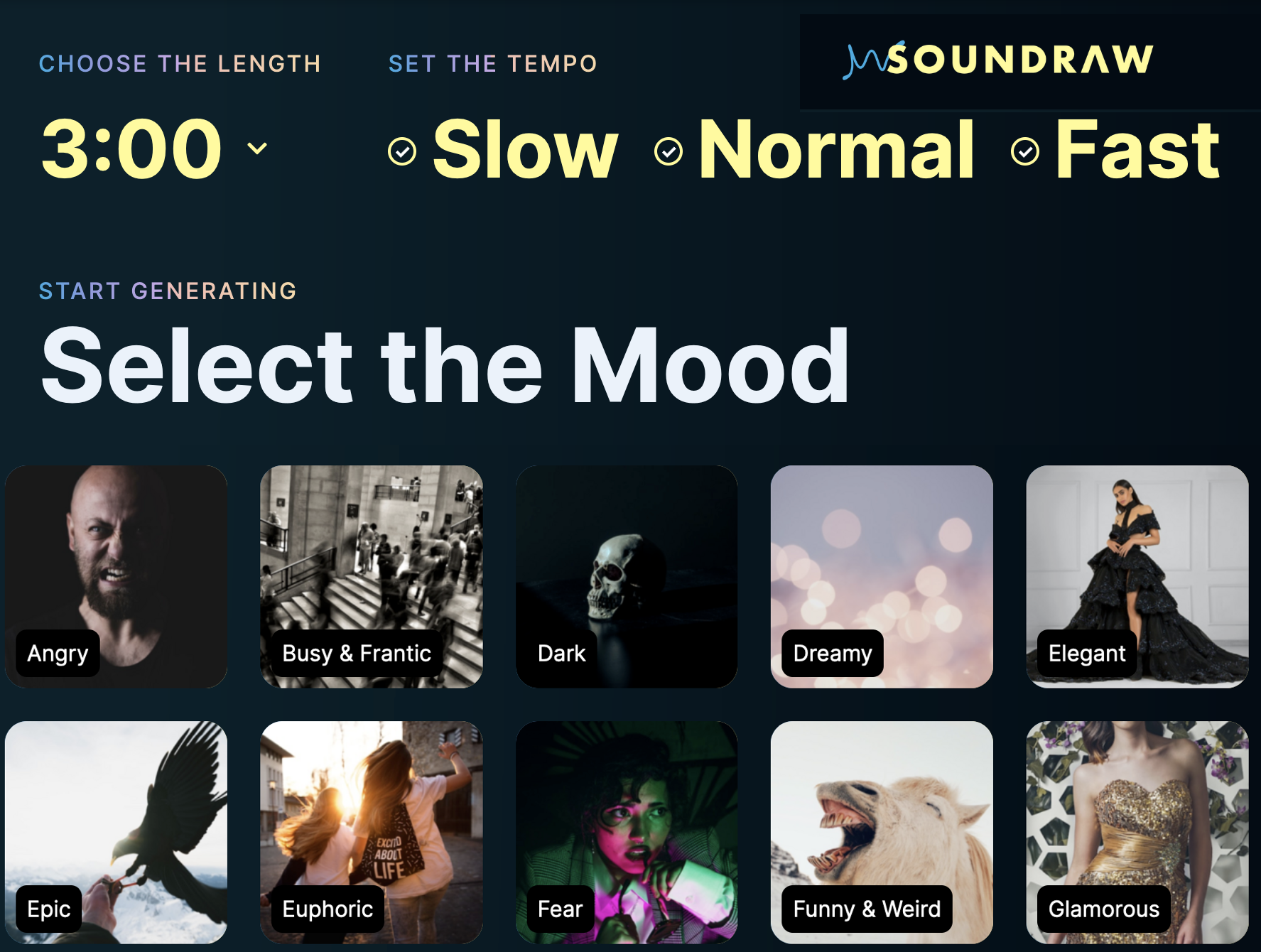

AI generated, rights free production music (main picture) – https://soundraw.io/create_music

Investigative fact checking tool for journalists used by Radio France – https://mbnuijten.com/statcheck

Secure speech-to-text transcription service – https://www.mygoodtape.com

AI generated and edited videos –

AI Generated graphics – https://aistudios.com

AI Generated voices –